Pedagogical strategies for teaching Virtual Production pipelines

Published at SIGGRAPH Asia 2023 Educator’s Forum, Sydney, NSW, Australia. https://dl.acm.org/doi/abs/10.1145/3610540.3627010

Written by: Gregory Bennett, Hossein Najafi, Lee Jackson

Abstract

The incorporation of LED walls and virtual production tools in the film industry is a recent development that has significant pedagogical implications (BLISTEIN, 2020; FARID and TORRALBA, 2021). The use of LED walls combines physical and digital realities, potentially reducing post-production time and resource usage (ONG, 2020). Virtual production can facilitate immediate on-set decision-making and mitigate the need for certain post-production adjustments (EPIC GAMES, 2020). MIT researchers posit that such practices may also reduce the carbon footprint associated with location filming (FARID and TORRALBA, 2021). Nonetheless, further research and innovation are needed to overcome any limitations and fully harness the potential of this technology. The utilization of Unreal Engine software and other real-time tools in visual effects education aligns with industry trends and enhances student preparedness for professional practice (FLEISCHER, 2020). It has been suggested that engaging students with these tools can foster an understanding of the virtual production pipeline, thus aligning the educational curriculum with evolving industry standards (BALSAMO et al., 2021).

As educators at Auckland University of Technology, we recognized the necessity of early integration of these paradigm-shifting tools into our curriculum to prepare students for impending and ongoing industry changes. In anticipation of procuring LED walls, we explored the potential of leveraging existing resources to initiate a pedagogical foray into the virtual production sphere. The available resources encompassed a large green screen studio, a motion capture studio, and virtual reality (VR) headsets and trackers.

This paper offers two pedagogical responses that were deployed in courses situated in the final (third) year of undergraduate studies for an Animation, Visual Effects and Game Design Major, Bachelor of Design, at the School of Art and Design, Auckland University of Technology. One course is positioned within a motion capture minor and the other is an option within a major capstone project framework. These two responses form case studies in the technical modification of existing teaching equipment and the need to push the limits of existing software and hardware resources within the attendant budgetary constraints of a tertiary education institution. This, in turn, is in the service of meeting shifting tertiary curriculum demands in response to a technologically fluid and future-focused industry.

Keywords

education, computer graphics, virtual production, motion capture, virtual reality, compositing

ACM Reference Format:

Gregory Bennett, Hossein Najafi, and Lee Jackson. 2023. Pedagogical strategies for teaching Virtual Production pipelines. In SIGGRAPH Asia 2023 Educator’s Forum (SA Educators Forum ’23), December 12–15, 2023, Sydney, NSW, Australia. ACM, New York, NY, USA, 10 pages. https://doi.org/10.1145/3610540.3627010

Context

For lecturers Hossein Najafi and Gregory Bennett, this necessitated an adaptive and responsive approach to curriculum development and implementation, working with available hardware and software to replicate some of the technical and creative conditions of virtual production. The respective case studies include discussion of the following key challenges and concerns:

- Identifying what elements of virtual production can be reproduced in the absence of an actual LED wall.

- The augmentation of existing technical facilities to facilitate an operative virtual production learning experience.

- The challenge of constructing an effective technical/creative brief that includes learning outcomes that cover appropriate technical processes and allow creative agency for students.

- New or emerging software tools that provide ‘added value’ to the student learning

Case Study 1

The integration of offline green screen filming with virtual production and real-time tools

This study presents one of the interim solutions: the integration of offline green screen filming with virtual production and real-time tools. The integration of offline green screen methods with real-time tools like Unreal Engine has amplified the creative capacities of virtual production. While green screens have been employed in film production for decades to facilitate the insertion of varied backgrounds or visual effects in post-production, the advent of real-time engines like Unreal has enhanced this process significantly. The key factors here are interactivity and the potential for reviewing draft results on set. Research conducted by KIM et al. (2021) found that this interactive and immersive environment not only increases the efficiency of the filming process but also enhances the overall performance of actors, as they can respond more accurately to their surroundings.

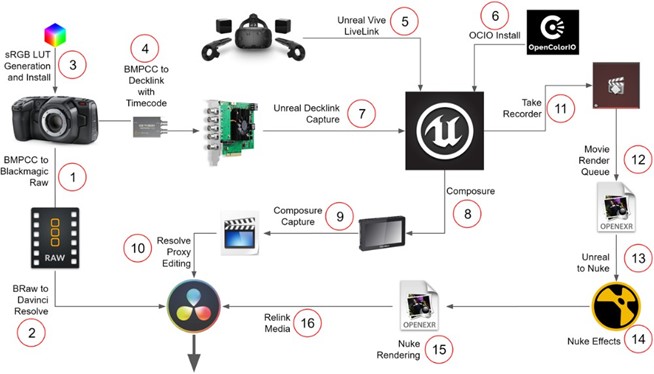

Figure 1: Offline virtual production workflow, retrieved from: https://vpifg.com/workflows/BURN/

In order to facilitate this, visual effects lecturer Hossein Najafi equipped a green screen studio with a variety of tools and technologies. These included a high-performance GPU computer to host Unreal Engine, Decklink cards for timecode capture, VIVE Pro trackers, an URSA mini camera, and necessary interconnections between pipeline components. Figure 1 illustrates the composition of this technologically advanced pipeline. The succeeding section provides an examination of a final year student capstone project, serving as a case study to underscore the implementation and outcomes of employing virtual production tools in an educational setting.

“The Sanctioned” Capstone Project

Students Danielle Mclean, Alicia Brenk, and Betty Kennedy examined a green-screen, single-camera, shot-oriented workflow for pre-recorded film. The method comprises two parts. First, the equipment records internally at its highest quality, maximizing the resolution of the captured footage. Second, a low-resolution real-time rendered composite is recorded and is viewable on-set during the shot. This lower-quality footage is immediately available for editing and includes all final visual effects, excluding any potential detailed compositing visual effects. After editing, the high-quality footage can be substituted using timecodes, and the project then moves into a high-quality compositing stage in Foundry’s Nuke compositing software for the final output. Using an HTC VIVE VR tracking set-up, students attached HTC VIVE trackers to the camera to track and record camera movements in 3D space and transfer them into Unreal Engine (Figure 2 and Figure 3).
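The timecode-based substitution of high-quality footage described above can be illustrated with a minimal sketch. It assumes non-drop-frame timecode of the form HH:MM:SS:FF and a frame rate of 24 fps, neither of which is stated in the project description; the function names and example values are hypothetical and are not taken from the students' pipeline.

```python
def timecode_to_frames(tc: str, fps: int = 24) -> int:
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count.

    The 24 fps default is an assumption made for illustration only.
    """
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} is out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def conform_range(edit_in: str, edit_out: str, clip_start: str,
                  fps: int = 24) -> tuple[int, int]:
    """Map an edit's in/out timecodes to frame offsets in the high-quality clip.

    clip_start is the timecode of the first frame of the high-quality recording.
    Returns (first_frame, last_frame), zero-based and inclusive.
    """
    start = timecode_to_frames(clip_start, fps)
    first = timecode_to_frames(edit_in, fps) - start
    last = timecode_to_frames(edit_out, fps) - start
    if first < 0 or last < first:
        raise ValueError("edit range falls outside the source clip")
    return first, last
```

Because the low-resolution composite and the camera's internal recording share the same timecode, a cut made on the proxy can be mapped to the matching frames of the high-quality clip without manual alignment.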

Figure 2: Visual effects student, Danielle Mclean, is tasked with capturing green screen footage. Simultaneously, a live feed of the camera movement is transmitted to Unreal Engine, which then generates a low-resolution, real-time render for immediate and interactive evaluation.

Figure 3: VIVE tracker is attached to the camera which is mounted to a gimbal. The tracker captures camera movements for live feedback.

Figure 4: 24-camera Motion Capture Lab at AUT.

Figure 5: Faceware wireless head-mounted camera system.

This procedure enabled students to apply the same handheld camera movements from live footage onto their virtual environment. Also, the live feedback facilitated fast decision making for positioning the lighting within the green screen studio.

Case Study 2

Live streaming motion capture data from a 24-camera Motion Analysis system into Unreal Engine.

Background. A Motion Capture Lab facility at Auckland University of Technology (AUT) was established in 2014, with the aim of servicing teaching, research and industry collaborations. For body capture the lab features a Motion Analysis system with 24 Raptor-4 digital cameras operating at up to 200 fps at a full resolution of 2352 x 1728 pixels, and up to 10,000 fps at reduced resolutions. The capture volume is up to 6 x 6 x 3 meters and supports simultaneous capture of up to six performers. For facial motion capture the Faceware wireless head-mounted camera system is deployed and can be synced with body motion capture. The lab also includes an Insight Virtual Camera System (VCS) for real-time virtual camera tracking.

Figure 6: Insight Virtual Camera System (VCS) for real-time virtual camera tracking.

A Motion Capture Minor consisting of four courses as a study option throughout an undergraduate Bachelor of Design was inaugurated by lecturer Gregory Bennett when the lab was commissioned:

Year 1: Introduction to Motion Capture

- Introduces motion capture theory and practice with a focus on learning technical pipelines for body motion capture that is then deployed in the production of a 3D previsualization

Year 2: Visualizing Motion Capture

- Develops practical and conceptual skills for exploring a range of experimental and abstract visualizations of human movement data using 3D particle and simulation

Performance Capture

- Focuses on facial performance capture using Faceware hardware and software for the production of a digital character

Year 3: Motion Capture Project

- Building on accumulated knowledge and experience of motion capture tools, concepts and applications, students will undertake a guided capstone

The Minor became a popular option for students studying animation, visual effects and game design pathways, as well as some students from related disciplines such as creative technologies and computer science.

The course content provides an in-depth introduction to all aspects of motion capture including body and facial capture, and the virtual camera, within a pedagogical framework that applies the motion capture technical processes and techniques to creative briefs that focus on filmic visual storytelling and actorly performance. This enables the reinforcement of core moving image narrative principles, while also responding to ‘advice from industry advisors, namely that they are looking for graduates who understand the cinematographic storytelling context of an animation or visual effects shot, or a MoCap motion editing task’ (BENNETT and KRUSE, 2015). For example, the foundation Motion Capture Minor course introduces the basic motion capture pipeline for body capture through a creative brief to produce a previsualization sequence based on interpreting a one-page script sample. This enables students to learn the motion capture production and post-production pipeline including performer capture, data clean-up, the application of data to digital characters and objects, motion editing, placement of characters in a digital environment, and the construction of a final edited sequence focusing on camera (framing and movement) and editing, in the service of creating an effective and engaging previsualization sequence.

Live streaming set-up

The emergence of Unreal Engine game engine software as an increasingly effective real-time rendering tool, and related features such as MetaHuman Creator, a 3D character-creation tool that appeared in 2021, prompted the development of the live streaming capabilities of the motion capture system by senior technician Lee Jackson. Jackson worked to enable three simultaneous data sets to be live streamed into Unreal Engine via Autodesk Motion Builder:

- bodily performance motion capture data (from Motion Analysis’ Cortex Motion Capture software)

- virtual camera motion capture data (from Motion Analysis’ Cortex Motion Capture software)

- facial performance data (from Faceware facial motion capture software)

This effectively enabled body and facial performance data to be streamed live to a MetaHuman character situated in an Unreal Engine environment, plus a tracked virtual camera operating in the same 3D virtual environment.

A live video feed of the real-time rendered scene from the virtual camera point-of-view was also viewable in the screen viewport of the virtual camera as well as on a video screen on the wall of the motion capture lab.

The hardware set-up for this required two high-performance GPU computers, one to run the Motion Analysis Cortex motion capture software, and one to run both Autodesk Motion Builder and Unreal Engine. Custom Cortex software plugins enabled the streaming of virtual skeleton data from the body capture, and the virtual camera movement data into Motion Builder. Unreal Engine Live Link was then deployed to stream body data to drive the animation of a MetaHuman character, and camera movement data to drive a virtual camera in Unreal Engine. Faceware facial motion capture data was streamed separately via Live Link to drive the animation of the MetaHuman facial rig.
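The routing described above can be summarized as a small data model. This is only an illustrative sketch of the topology as the text describes it; the `Stream` class, the `STREAMS` table and the helper functions are hypothetical names introduced here and do not correspond to any Cortex, Motion Builder, Faceware or Unreal Engine API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stream:
    """One live data stream: where it starts, what it passes through, what it drives."""
    name: str
    source: str
    hops: tuple[str, ...]
    drives: str


# Topology as described in the text; the labels are descriptive, not API identifiers.
STREAMS = (
    Stream("body", "Cortex", ("Motion Builder", "Unreal Engine (Live Link)"),
           "MetaHuman body animation"),
    Stream("camera", "Cortex", ("Motion Builder", "Unreal Engine (Live Link)"),
           "virtual camera"),
    Stream("face", "Faceware", ("Unreal Engine (Live Link)",),
           "MetaHuman facial rig"),
)


def route(stream: Stream) -> str:
    """Render a stream's full path as a readable string."""
    return " -> ".join((stream.source, *stream.hops, stream.drives))


def streams_through(node: str) -> list[str]:
    """List the names of the streams that pass through a given pipeline stage."""
    return [s.name for s in STREAMS if node in s.hops]
```

Writing the routes out this way makes the dependencies explicit: in this model a fault in Motion Builder would interrupt the body and camera streams, while the facial stream reaches Unreal Engine by its own path.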

Once this capability was realized by Lee Jackson and demonstrated to motion capture lecturer Gregory Bennett, the decision was made to test this pipeline in the classroom. Given that this capability was a development out of an existing motion capture workflow, Bennett chose to implement it in the fourth and final motion capture course. This built on the previous knowledge of students now familiar with the established motion capture pipeline, extending their practice to encompass new software and pipeline frameworks that approached virtual production conditions.

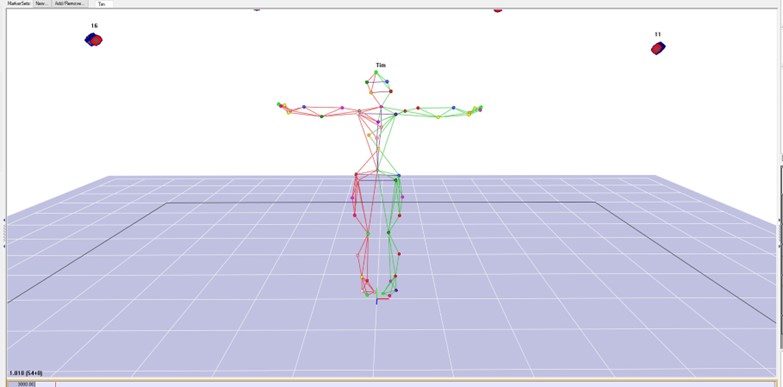

Figure 7: Motion capture data from live performer in Motion Analysis Cortex software application.

Figure 8: Motion capture data live streamed into Autodesk Motion Builder.

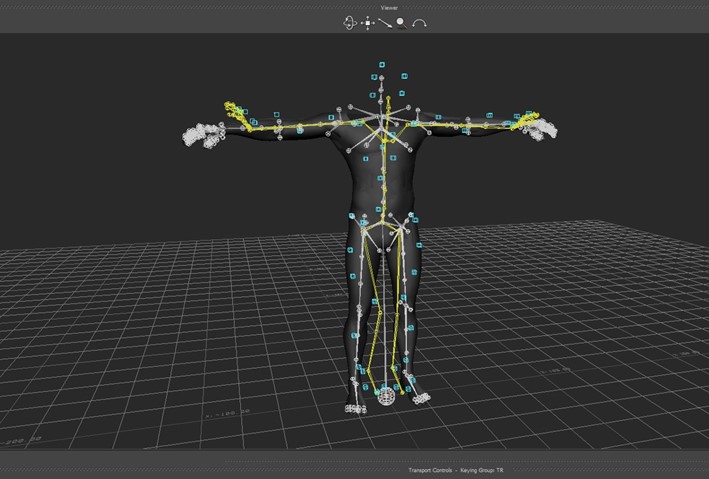

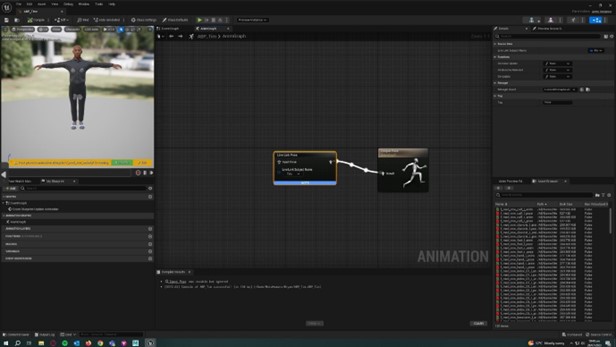

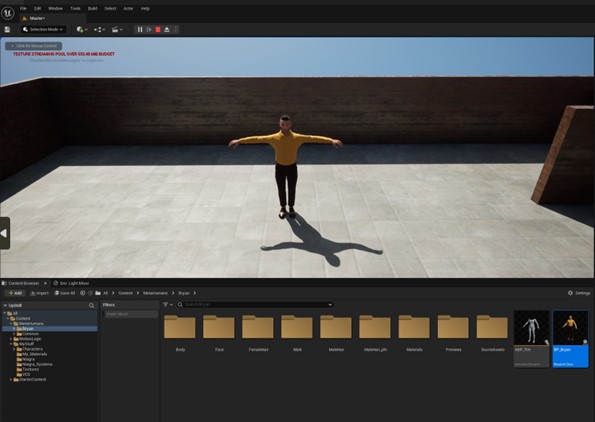

Figure 9: Unreal Engine Live Link deployed to stream body data to drive the animation of a MetaHuman character.

Figure 10: MetaHuman character in Unreal Engine.

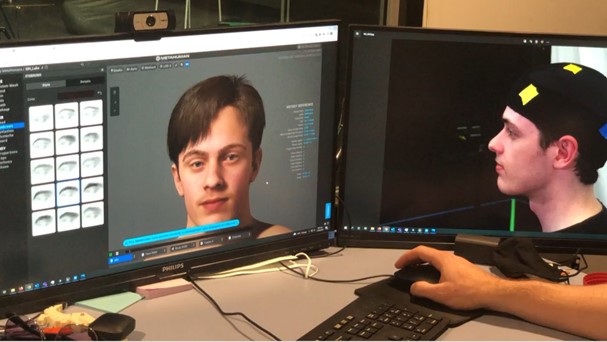

Figure 11: Faceware facial motion capture data streamed separately via Unreal Engine Live Link to drive the animation of the MetaHuman facial rig.

Figure 12: Live streaming body and facial motion capture data to a MetaHuman character in Unreal Engine. Live stream viewed through tracked virtual camera.

Photogrammetry

An added feature also developed by senior technician Lee Jackson was the capacity to use photogrammetry to capture a performer’s facial features and apply this to a MetaHuman character. This allowed for further potential creative investment by students in customizing their digital character design and introduced the practice of the digital double for live action actors. A 3D mesh and texture map are generated as an OBJ and PNG file respectively from nearly sixty still images of the performer’s face using Reality Capture software. These files are then imported directly into MetaHuman and integrated with a digital character. The head and facial features can then be customized using editing tools in MetaHuman.

One performance element that could not be captured was finger movement. Although the StretchSense motion capture glove had been successfully tested with the body and facial capture, this system had not been purchased. An interim solution was to at least pose the fingers appropriately so that they were not frozen in the default splayed position.

Figure 13: Photogrammetry set-up for face capture.

Figure 14: Photogrammetry data applied to MetaHuman character.

Figure 15: Photogrammetry data of performer can be customized in MetaHuman to create a unique digital character that will be used for live motion capture.

Output limitations

Although it is possible to save all the output data in an Unreal Engine level file, the decision was made that for the student projects only screen recordings of the live streamed real-time rendered shots would be provided for editing. This created a useful creative limitation in that shots were essentially locked off with no opportunity for further post-production manipulation, requiring students to be invested in getting all of the in-shot elements correct at the time of capture, as with a typical live action shoot.

Creative Brief and pipeline

The challenge was to construct a brief that engaged students with all of the new and extended technical capabilities, gave them some individual creative agency, and also incorporated significant virtual production tools and processes. Virtual production features included a ‘live’ production shoot bringing together a live performer and virtual camera streamed into a real-time rendered Unreal Engine scene that would also require ‘live’ changes to virtual lighting, props, virtual camera settings, simulation effects such as flames or smoke and interaction with a pre-animated character or prop, and a live ‘trigger’ element.

Mirroring industry practice around virtual production, this was a significant change from the previous, more linear pipelines familiar to students, whereby the gathering of 3D motion capture data and the sourcing and construction of 3D assets was followed by significant post-production, from motion editing to decision-making around shot choices, camera framing/movement and editing, culminating in final lighting and rendering of scenes.

By contrast, the newly developed pipeline, which allowed ‘on set’ elements to be live-streamed into a real-time rendered scene, shifted the pipeline balance toward much greater pre-production planning time. It essentially compressed production, and much of what were previously post-production components, into the live capture event, leaving just the final editing of captured shot coverage to complete after the live capture session.

A consequence of this was the need to deliver curriculum content that covered more traditional live-action planning and shooting protocols that would ensure appropriate on-set shot coverage to enable choices in editing, and also consideration of the rules of continuity editing such as the 180-degree rule and cutting on action. The students were used to the freedoms of a 3D digital post-production environment where decisions around camera position, focal length, framing and movement could be made within environments with pre-animated and motion captured assets. Direct engagement in an on-set ‘live’ virtual production session allowed for the gaining of valuable expertise in more ‘traditional’ production planning and execution that included processes such as script breakdowns, shot lists, use of the master shot technique (or master scene method) to ensure an adequate baseline of shot coverage, and practice with practical camera operation.
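The 180-degree rule mentioned above lends itself to a simple geometric check that could be run over a planned shot list. The sketch below is an illustrative assumption rather than part of the course material: it treats the floor plan as 2D, takes the axis of action as the line through two characters, and uses hypothetical function names.

```python
Point = tuple[float, float]


def side_of_axis(a: Point, b: Point, camera: Point) -> float:
    """Signed area test: positive on one side of the line a->b, negative on the other."""
    return (b[0] - a[0]) * (camera[1] - a[1]) - (b[1] - a[1]) * (camera[0] - a[0])


def respects_180_rule(a: Point, b: Point, cameras: list[Point]) -> bool:
    """True if every camera position lies strictly on the same side of the axis.

    A camera placed exactly on the axis (signed area of zero) is treated as a
    violation here, which is a conservative choice made for this sketch.
    """
    sides = [side_of_axis(a, b, cam) for cam in cameras]
    return all(s > 0 for s in sides) or all(s < 0 for s in sides)
```

For two characters at (0, 0) and (2, 0), camera set-ups at (0.5, -3) and (1.5, -2) stay on one side of the axis, whereas adding a set-up at (1, 2) crosses the line and would flip screen direction in the edit.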

Figure 16: Group virtual production capture session with performer, virtual camera operator, and Unreal Engine operators in background.

Group vs Individual work

Another consideration was creative agency for the individual student vs. the need for group work to plan, prepare diverse assets, and execute a live capture session requiring a crew of at least a director, performer, camera operator and Unreal Engine operator. To address this, pre-production and production were designated as group work, while the final editing would be completed individually. One useful learning outcome of this would be students witnessing diverse creative outputs from the same source footage, underscoring the importance of editing as an adaptive and malleable creative tool. The group work section of the pipeline allowed for a division of labor in which students could either work to a particular skillset they had previous expertise in, such as modelling and texturing assets or working with an Unreal Engine scene level, or they could take on something new. Group work also reinforced best practice around collaborative working experience in visual effects pipelines.

Students were also required to document their individual production and post-production processes in video form, highlighting their own individual contribution to the group work. This, along with producing an individually crafted final edit, made individualized assessment possible.

Assignment Brief

The assignment brief itself was presented as follows:

Brief: Create a short scene using motion capture and a virtual camera live-streamed into a real-time rendered Unreal Engine scene. Scene duration: 30-60 seconds

Conceive, plan, and capture a virtual production scene/sequence with the following elements:

- 1 x live motion-captured performer

- 1 x MetaHuman character with photogrammetry generated facial features

- 1 x live operated virtual camera

- 3D environment created in Unreal Engine that includes:

- Scene and prop elements appropriate to the scene narrative

- Lighting appropriate to the scene narrative

- 1 trigger element

- 1 simulation element (e.g., smoke, fire, water)

- 1 pre-animated element such as a figure, creature, android

Your scene takes place in one location – but set details/lighting/camera settings must show changes as the scene progresses. For example:

- Change of time of day/night

- Set/props moved around - can create the impression of a different location

- Change of camera settings - standard setting to depth of field

Scene must include:

- more than one lighting set-up

- more than one camera focal length setting

- changes to set details – e.g., props, sets, scene assets

- simulation/trigger effect

Pre-production and production

Work in groups for production, then shape your own final edit in post-production.

Group work tasks

Group members can take turns in these roles, share roles, and/or distribute these roles/tasks as required:

- Director

- Camera operator

- Photogrammetry model

- Mocap performer (you can use an outside performer)

- Unreal Engine operator(s) – look after UE level project file – responsible for importing and arranging assets, lighting set-up, simulation elements, trigger element

- Asset maker(s):

- MetaHuman head from photogrammetry data

- MetaHuman character design/customizations

- modelling/texturing environment and prop elements

- Animator for pre-animated figure/creature/element

Narrative

Scene content/narrative is to be developed by the group. Conceive an original scene as a group with a beginning-middle-end structure.

Group work

Phase 1: Ideation/Conception/Narrative development

- Conceive and plan a short (30-60 secs) narrative sequence to be created as a virtual production scene.

- Create a treatment, script, storyboard and animatic to develop your story idea.

- You can include alternative takes, different endings to accommodate your group ideas, and provide some choices in the final individual edit.

Phase 2: Pre-production

- Break down your script/storyboard/animatic to plan for required

- Capture photogrammetry data for MetaHuman character

- Create required assets:

- Create UE project level for assets

- Source and customize assets from UE marketplace

- 3D modelling and texturing environment, props

- Create a digital character for mocap performer using photogrammetry data from head scan of a live model integrated with a MetaHuman character

- Create and animate pre-animated element (element, figure, creature, android, machine)

- Create trigger element in UE

- Create simulation element in UE (e.g., smoke, fire, water)

- Unreal Engine file must be approved by the Motion Capture lab technician before deployment in Mocap Studio session.

- Cast and rehearse

- Shot list for Mocap/Virtual production session – including alternative takes.

Phase 3: Production

- Book Mocap studio

- Conduct Mocap/Virtual production

- Document production session (video documentation)

- Book back-up session if

- Collect video captures and mocap files from session

Individual work

Phase 4: Post-Production

- Create individual

- Create audio

- Output finished edit of scene/sequence.

- Create ‘Making of’ video documenting production and post-production process.

Your assignment submission will include these components:

- Your completed virtual production project as a video file.

- A detailed breakdown/‘making of’ video which details the production and post-production process including work-in-progress, iterations, experiments, failures, successes, mocap studio session documentation.

Marking and Feedback Criteria

- The finished work fulfils all brief and task

- All required material submitted, and tasks

- An engaging and creative response to the

- An in-depth exploration of the creative and technical possibilities evident.

- A productive, experimental, and exploratory work habit evident.

- Ideas developed in an innovative and imaginative manner through creative and critical

- Demonstrates required capability in a range of 3D software tools appropriate to your project

- Demonstrates the ability to execute and locate resolved work from open-ended exploration and experimentation.

- Work process comprehensively documented in online

- Work-in-progress video effectively communicates the work process from concept to final
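The scene requirements listed in the brief can be expressed as a simple pre-shoot checklist, which a group could use before booking a studio session. The sketch below is a hypothetical illustration of that idea: the `ScenePlan` fields and the `unmet_requirements` function are names invented here, and the thresholds are taken directly from the brief (30-60 seconds, more than one lighting set-up, more than one focal length, set changes, a simulation element, a trigger element and a pre-animated element).

```python
from dataclasses import dataclass


@dataclass
class ScenePlan:
    """A group's planned scene, described in terms of the brief's requirements."""
    duration_s: float
    lighting_setups: int
    focal_lengths_mm: list[float]
    has_set_changes: bool
    has_simulation: bool
    has_trigger: bool
    has_pre_animated_element: bool


def unmet_requirements(plan: ScenePlan) -> list[str]:
    """Return a list of brief requirements the plan does not yet satisfy."""
    problems = []
    if not 30 <= plan.duration_s <= 60:
        problems.append("scene duration must be 30-60 seconds")
    if plan.lighting_setups < 2:
        problems.append("more than one lighting set-up is required")
    if len(set(plan.focal_lengths_mm)) < 2:
        problems.append("more than one camera focal length is required")
    if not plan.has_set_changes:
        problems.append("set details must change during the scene")
    if not plan.has_simulation:
        problems.append("a simulation element is required")
    if not plan.has_trigger:
        problems.append("a trigger element is required")
    if not plan.has_pre_animated_element:
        problems.append("a pre-animated element is required")
    return problems
```

An empty list means the plan covers every item in the "Scene must include" section; any returned strings point to the elements still missing.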

Discussion of student project outcomes

A primary challenge in the conception, production and pre-production stages was in scaling project ambitions and creative expectations to that of an achievable scope based on the physical and technical limitations of the lab space itself and of the technical thresholds of the live streaming system, given that there is a significant data flow from tracked elements (body, facial and camera) into a real-time rendered scene.

Figure 17: Extreme close-up framing with virtual camera; Group virtual production project by Hanvit Kang, Dana Ni & Raissa Zhang.

Figure 18: High angle framing with virtual camera position offset in Unreal Engine; Group virtual production project by Hanvit Kang, Dana Ni & Raissa Zhang.

Scene action for the motion captured performer was limited to the 6 x 6 x 3 meter capture volume, so adjustments were sometimes needed to the staging of the live action performer. There was also the possibility of moving the alignment of the 3D environment itself in relation to the ‘safe’ capture area of the physical capture volume. A master move control for all of the scene elements in Unreal Engine was therefore necessary, so that the environment could be re-positioned and the performer could appear to be in a different location.
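The idea of a master move control can be sketched as a single translation applied to every environment element, so that a chosen virtual location lands on a chosen point inside the physical capture volume. The coordinate convention below (origin at the centre of the floor, z up, units in meters) and all function names are assumptions made for illustration; they do not describe the actual Unreal Engine set-up used in the lab.

```python
Vec3 = tuple[float, float, float]

# Capture volume from the text: 6 x 6 x 3 meters (width, depth, height).
VOLUME_M = (6.0, 6.0, 3.0)


def in_capture_volume(p: Vec3, margin: float = 0.0) -> bool:
    """True if a physical point is inside the volume, shrunk by an optional safety margin."""
    half_x = VOLUME_M[0] / 2 - margin
    half_y = VOLUME_M[1] / 2 - margin
    return abs(p[0]) <= half_x and abs(p[1]) <= half_y and 0.0 <= p[2] <= VOLUME_M[2] - margin


def master_move(physical: Vec3, virtual: Vec3) -> Vec3:
    """Translation that brings a virtual location onto a physical point in the volume."""
    return tuple(p - v for p, v in zip(physical, virtual))


def apply_move(point: Vec3, move: Vec3) -> Vec3:
    """Apply the master translation to one environment element."""
    return tuple(c + m for c, m in zip(point, move))
```

For example, to stage action that takes place at virtual position (40, -12, 0) in the middle of the lab floor, every scene element would be shifted by `master_move((0, 0, 0), (40, -12, 0))`, while the performer's physical staging is checked with `in_capture_volume`.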

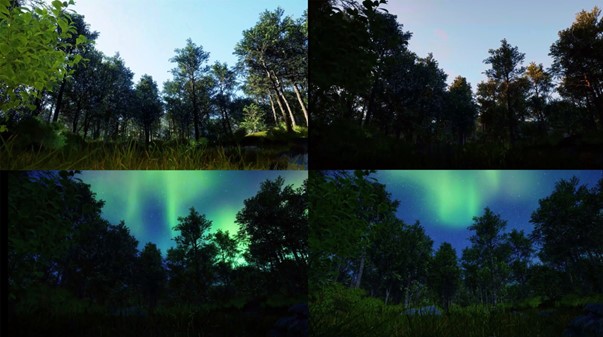

Some creative latitude was possible for the positioning of the virtual camera. For the most part the virtual camera was operated as a hand-held camera, which meant shots could be at eye-level or lower. The camera position could, however, be offset in the Unreal Engine level, so wide shot, high angle and/or birds-eye view framing was possible and was often explored in projects to provide more creative variation in the visual storytelling.

For optimal performance of any game level, managing and optimizing the asset load and elements such as simulation effects to target an appropriate frame rate is very important. One particular component that proved challenging was MetaHuman characters that had any sort of hair simulation. Live simulations had to be turned off in favor of utilizing hair cards to avoid performance issues with the live streaming system. It was also found that extra technical guidance was needed in monitoring the scenes being created by students. For example, scenes with foliage or complex lighting needed to be pre-tested in advance of a shooting/capture session to ensure frame rates were sufficient for the scene to be operational.

Figure 19: Scene with significant foliage requiring pre-testing and optimization to meet frame-rate requirements of live shoot; Group virtual production project by Gabrielle Gavin, Evelyn Alsemgeest, Nana Frimpong and Max Roach.

Figure 20: Western genre group virtual production project by Anya Hill, Jacky Alex Jones, Lucas Lam, Emily Liu, Christopher Maxwell, and Henry Qian.

Normally a frame rate of 60 fps would be acceptable, but due to the need to employ screen-recording software (in this case OBS) a frame rate of 90 fps was required. The deployment of a third PC to handle a screen-recording feed would mitigate this requirement.

Overall, the final edited outcomes demonstrated a diverse range of responses to the brief itself, with playful and often imaginative explorations of genre tropes such as horror, fantasy and westerns. Students also responded positively to the relative visual complexity possible with Unreal Engine’s rendering capabilities, so texturally detailed environments and props and dramatically expressive lighting could also be investigated.

Lighting effects were also able to be adjusted and manipulated live on-set, with students taking creative advantage of the possibilities of real-time rendered feedback.
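The frame-rate targets mentioned in this section (60 fps normally, 90 fps when screen recording runs on the same machine) translate into per-frame time budgets that can be used when pre-testing a scene. The following is a minimal sketch of that arithmetic; the function names and the sample frame times are hypothetical and are not measurements from the student projects.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to render one frame, in milliseconds, at a target frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def meets_target(frame_times_ms: list[float], target_fps: float) -> bool:
    """True if the slowest sampled frame still fits within the target's budget."""
    return max(frame_times_ms) <= frame_budget_ms(target_fps)
```

At 60 fps each frame has roughly 16.67 ms available, while at 90 fps the budget drops to roughly 11.11 ms. A scene whose slowest frame takes 12.5 ms would therefore pass a 60 fps check but fail the 90 fps requirement, which is why foliage-heavy or simulation-heavy scenes needed optimization before a session.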

Figure 21: Dramatic lighting in group virtual production project by Dana Helene Gatland, Jack Hudner, Joshua Masson, Michael Pyle and Oliver Scott.

Figure 22: ‘Live’ Time-lapse lighting effect; Group virtual production project by Gabrielle Gavin, Evelyn Alsemgeest, Nana Frimpong and Max Roach.

Figure 23: Live simulation element: fire, smoke and destruction.

Live simulation effects such as fire, smoke and destruction were also deployed, often as key elements in the visual narratives.

Shot coverage was good overall, with awareness of the need for close-ups and point-of-view shots and shot-reverse shot cutting patterns to enhance viewer identification with characters. However, coverage was not always completely adequate, as was evident in some sequences that had issues with maintaining continuity in cutting on action, cutting between different frame sizes, and screen direction. This was a useful learning experience for students normally used to the flexibility of post-production manipulation, particularly with regard to camera placement choices in 3D environments.

Figure 24: Shot-reverse shot cutting between two characters from group virtual production project by Anya Hill, Jacky Alex Jones, Lucas Lam, Emily Liu, Christopher Maxwell, and Henry Qian.

It must also be acknowledged that it helps to have students in the final year of undergraduate study, where they are relatively confident and established in a variety of digital skillsets, have experience with working in groups, and have an understanding of the grammar of filmic storytelling. In particular, Unreal Engine experience in at least one production group member, and general 3D animation and modelling skills overall, were advantageous. An introductory virtual production course would have to look at practical strategies for getting students unfamiliar with Unreal Engine and 3D animation up to speed for a production-ready situation.

Pedagogical benefits

As noted by FLEISCHER (2020), virtual production tools such as Unreal Engine enable students to conceive, visualize, and execute cinematic ideas in real-time, fostering creativity, problem-solving, and technical skills. In another study, BALSAMO et al. (2021) found that the use of virtual production tools in educational settings fosters collaborative learning as students work together to manage and operate complex systems. It was also observed that such tools allow for immediate feedback, enabling students to quickly identify and rectify mistakes, thus enhancing their learning process. Virtual production tools can also simulate various filming environments and situations that would be costly or logistically challenging to recreate physically, providing students with a diverse range of practical experiences (CHEN et al., 2021). Despite these advantages, educators must also recognize the need for comprehensive training and resources to ensure the effective integration of these tools into teaching methodologies.

References

BALSAMO, D., BERTA, R., DE GLORIA, A., AND ADDIMANDO, L. 2021. Innovative Tools and Strategies for Teaching and Learning Film Studies. Journal of e-Learning and Knowledge Society, 17(2). https://doi.org/10.20368/1971-8829/1135042

BENNETT, G., AND KRUSE, J. 2015. Teaching visual storytelling for virtual production pipelines incorporating motion capture and visual effects. SIGGRAPH Asia 2015 Symposium on Education, 1–8. https://doi.org/10.1145/2818498.2818516

BLISTEIN, J. 2020. ’The Mandalorian’: See How the Planets Were Created in New Docuseries Clip. Rolling Stone. https://www.rollingstone.com/tv/tv-news/the-mandalorian-gallery-disney-plus-docuseries-994435/

CHEN, C., CHENG, L., AND CHEN, Y. 2021. A Study of Applying Virtual Reality to Experiential Learning in Film Education. Computers & Education, 161. https://doi.org/10.1016/j.compedu.2021.104064

EPIC GAMES. 2020. Virtual Production: A Frontline Report. https://www.unrealengine.com/en-US/spotlights/virtual-production-frontline-report

FARID, H., AND TORRALBA, A. 2021. How Computer Science Is Changing Moviemaking. Massachusetts Institute of Technology. https://news.mit.edu/2021/how-computer-science-changing-movie-making-0526

FLEISCHER, C. 2020. Exploring Filmmaking with Unreal Engine. Journal of Media Practice and Education, 21(1), 71–85. https://doi.org/10.1080/25741136.2020.1721468

KIM, J., GU, Y., KAUTZ, J., AND THEOBALT, C. 2021. Deep Video Portraits: How AI technology is Changing Filmmaking. IEEE Transactions on Visualization and Computer Graphics. https://doi.org/10.1109/TVCG.2018.2853723

ONG, T. 2020. Epic Games CTO Kim Libreri is Preparing for a Future in Which Video Games are Unindistinguishable From Reality. The Verge. https://www.theverge.com/2020/7/16/21326091/epic-games-kim-libreri-fortnite-unreal-engine-5-interview-future-of-gaming